Occasionally I post things here to help me remember all the stuff I was thinking about…

Spatialized morning coffee! I’ve been slightly obsessed lately with all the cool things you can do with SwiftUI and RealityKit… so I built myself a little gesture-driven menu system that uses webhooks to trigger my coffee machine.
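The webhook side of an idea like this boils down to a single HTTP POST. Here is a minimal sketch in Python rather than Swift, assuming a hypothetical endpoint URL and a made-up `drink` payload field. It only builds the request and does not send it.

```python
import json
import urllib.request

# Hypothetical endpoint: substitute the URL your automation service provides.
WEBHOOK_URL = "https://example.com/hooks/coffee"


def build_brew_request(drink: str, url: str = WEBHOOK_URL) -> urllib.request.Request:
    """Build (but do not send) the JSON POST a menu selection could fire."""
    body = json.dumps({"drink": drink}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_brew_request("espresso")
# To actually fire the webhook:
# urllib.request.urlopen(request, timeout=5)
```

Keeping the request builder separate from the send call makes it easy to check the payload before pointing it at a real machine.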

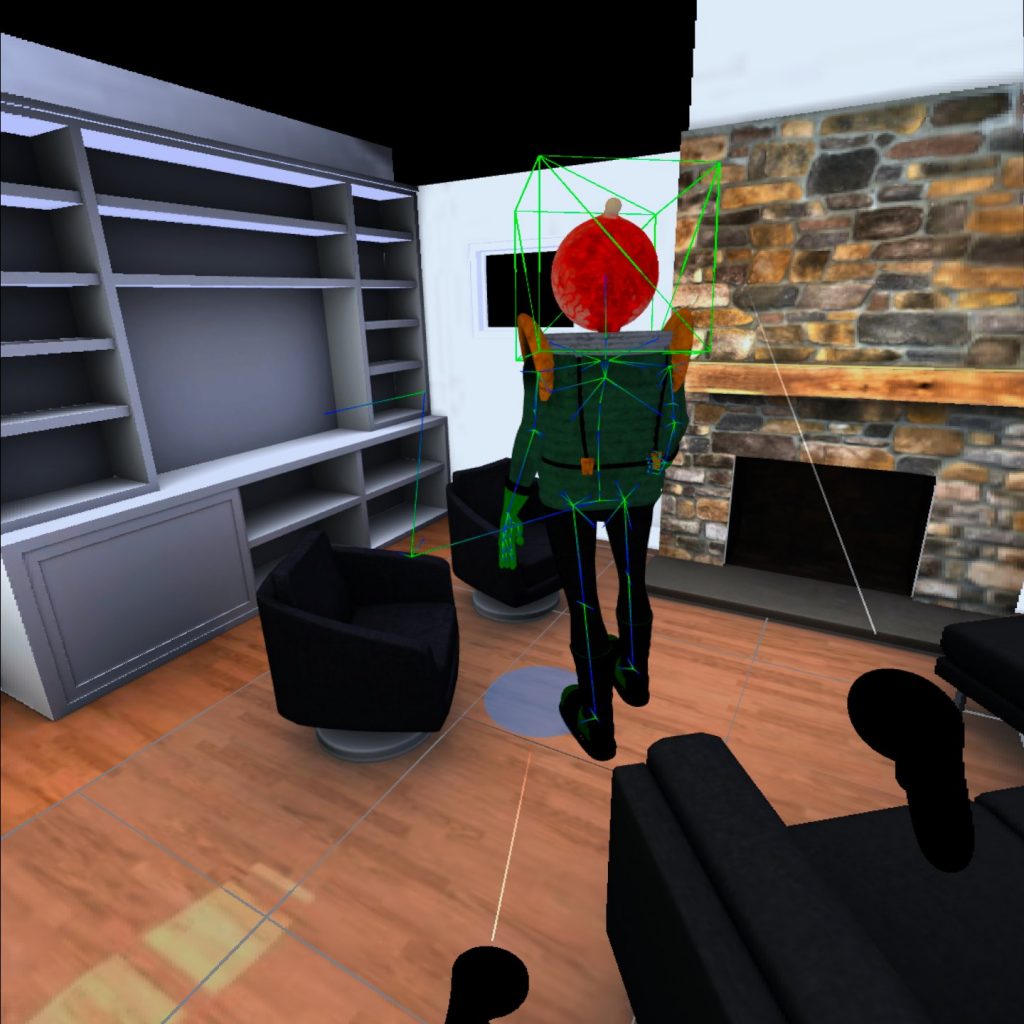

I was curious if you could stream mocopi data into AVP… turns out you can.

Made a spatial portal demo over the weekend… Converted FBX animation/assets to USDZ files. Layout and lighting in Reality Composer Pro. Xcode/RealityKit for rendering/gestures.

In the early 2000s I founded a mobile gaming startup. I wanted to create original IP for wireless devices and then spin it off into traditional media. I designed a mobile game for Nextel, AT&T, Motorola and Nokia called “Wasabi Run” and developed “Wasabi Warriors”, a companion 2d animated presentation done in Flash.

In 2012, during the stereoscopic boom, I found the project files for the short, separated out the original animation layers and brought them into After Effects to see if I could create a 3d version.

I pulled that 3d side-by-side QuickTime off an old hard drive, encoded it into Apple’s MV-HEVC spatial format and now I can watch it on my Vision Pro!

Unfortunately the parallax effect gets lost in this 2d capture from the headset, but I can assure you that it’s pretty cool to see these giant cartoon characters performing in my living room… more than 20 years later!!!

Friday afternoon hack… SwiftUI controlling the Hue API.
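The Hue bridge exposes a local REST API, and switching a light is a single PUT to that light's `state` resource. A rough sketch in Python rather than SwiftUI, assuming the bridge's v1 API; the IP address, username token and light id below are placeholders, and the request is built but not sent.

```python
import json
import urllib.request


def build_hue_state_request(bridge_ip, username, light_id, on, brightness=None):
    """Build a PUT request that sets a light's on/off state and brightness."""
    state = {"on": bool(on)}
    if brightness is not None:
        # The v1 API takes brightness as an integer from 1 to 254.
        state["bri"] = max(1, min(254, int(brightness)))
    url = f"http://{bridge_ip}/api/{username}/lights/{light_id}/state"
    return urllib.request.Request(
        url,
        data=json.dumps(state).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


# Placeholder values: use your own bridge address, token and light id.
hue_request = build_hue_state_request("192.168.1.10", "my-hue-user", 3, True, 999)
# To send it on the local network:
# urllib.request.urlopen(hue_request, timeout=5)
```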

I’ve been wanting to try creating some art directed destruction using Meta’s XR Scene Actor in combination with Unreal Engine Chaos Physics. I incorporated an AI navigation/behavior system to get enemies to track my location and used an experimental UE5 feature to tick the physics simulation on an async thread to get reasonable-ish performance for this demo.

I ran a quick comparison of Quest Pro and Quest 3 body and face tracking using metahuman rigs. I did some reading up on Meta’s Movement SDK and realized that there was a live link metahuman retargeting component. The documentation for setup is pretty thin but following along with their live link body example I discovered you can easily add a face and eye LL source to access that data.

Overall the face tracking on the QuestPro is pretty satisfying and works surprisingly well right out of the box with metahuman rigs. The Quest3 inside-out body tracking feels better and is noticeably more responsive than on the QP.

Realtime mocap previz in XR. Xsens body data is being recorded (for later post processing) in MVN and simultaneously streaming over Wi-Fi (via Live Link) to Unreal and the packaged Android XR app.

iPhone is recording MetaHuman Animator files for high fidelity facial performance solving/retargeting.

iPad is streaming Live Link ARKit Face over Wi-Fi to Unreal and to the packaged Android XR app.

Unreal is recording the previz (the combined realtime face and body performance) in Sequencer as separate takes for a quick editorial turnaround.

Tentacle timecode is feeding Xsens/iPhone/Unreal.

Happy New Year! From my family to yours… complete with a Gaussian Splat in WebXR. Follow the QRCode on your phone.

Fishing for a good book in VR:

Migrated my digitaltwin project to v57 of the metaXR plugin… just in time for the release of v59. 😂

Testing passthrough with collisions from the global scene mesh generated by room setup. Control rig foot-IK coming from refactored ALS.

Working out a dual boss battle mode in Verse.

After spending this past weekend thinking about splats… I woke up this morning curious if I could train data from an old VHS tape. I remembered I had some footage of myself on the rooftop of my apartment in Studio City in 1989. It’s pretty cool to think we can pull these moments from old media sources and augment or immerse ourselves in them relatively easily.

Also… I had some pretty rad hair back in the day. 😂

A gaussian splat in XR…

tl;dr

I used quadjr’s aframe-gaussian-splatting component and WebXR to visualize a splat in mixed reality using the Quest browser.

This was a 30k iteration model that I trained on a 4090 and edited down and exported as a .ply using a Unity gaussian viewer/editor.

Compressed down to ~5 MB and converted to .splat using antimatter15’s converter.
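For a sense of why a .splat file ends up so compact: as far as I understand that converter's output, each splat is a fixed 32-byte record (three float32 positions, three float32 scales, four color bytes, four rotation bytes). Treat that layout as an assumption to verify against the tool's source. The sketch below packs one record from values that are assumed to be already activated (linear scale, color and opacity in 0..1); `pack_splat` is a name made up for illustration.

```python
import math
import struct


def _to_byte(value):
    """Truncate to an integer and clamp into the 0..255 range."""
    return max(0, min(255, int(value)))


def pack_splat(position, scale, rgba, rotation):
    """Pack one gaussian into an assumed 32-byte .splat record.

    position, scale: 3 floats each.
    rgba: 4 floats in 0..1.
    rotation: quaternion (4 floats), normalised here before quantising.
    """
    norm = math.sqrt(sum(c * c for c in rotation))
    color_bytes = [_to_byte(c * 255) for c in rgba]
    quat_bytes = [_to_byte(c / norm * 128 + 128) for c in rotation]
    return struct.pack("<3f3f4B4B", *position, *scale, *color_bytes, *quat_bytes)


record = pack_splat(
    position=(0.0, 1.0, -2.0),
    scale=(0.5, 0.5, 0.5),
    rgba=(1.0, 0.5, 0.0, 1.0),
    rotation=(1.0, 0.0, 0.0, 0.0),
)
```

At 32 bytes per splat, a ~5 MB file works out to roughly 160k gaussians under this assumed layout.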

Finding that smaller objects with high density data look WAY better as splats than large spaces that are under-scanned. Almost all splats I’ve seen or generated look great when viewed at an angle close to the original camera input reference. This was the first one I’ve made that visually holds up from all angles. Bonus to be able to pick it up and look at it close up!

Took a quick run at building a few attack loops for a boss map. Super fast down and dirty custom metahuman face and body pipeline.

Are you tough enough to BEAT THE BIG BANANA BOSS!?! 👿 🍌

Island Code: 1412-7160-7196

Here’s a Gaussian splat in UEFN. Really cool to see all the recent structure from motion techniques taking off! Amazed at how fast the quality is improving and how easily splats can be imported into an interactive experience using Unreal Niagara particles.

I took open source LiDAR data of Lake Hollywood and made a Fortnite map using #UEFN

For the past 35+ years I’ve spent a lot of time wandering around the Hollywood Reservoir area. I know the streets and hiking trails like the back of my hand. I can see the Wisdom Tree from my house and it’s one of my favorite places in the city to visit.

I was curious what a world-scale island would feel like in Fortnite and was pleasantly surprised at how accurate and familiar the topography is. I used a height map from the USGS website, did some math, threw on a landscape material, painted foliage and dropped in a few assets from the Fab marketplace.
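The math for a world-scale landscape is mostly unit conversion. The sketch below is one way to do it, assuming Unreal's usual landscape convention (a Z scale of 100 spans 512 m of height, and an XY scale of 100 means 1 m per heightmap pixel). The elevation range and DEM resolution are made-up numbers, not the values from this project.

```python
def landscape_z_scale(min_elev_m, max_elev_m):
    """Z scale so the full 16-bit height range maps to the real elevation span."""
    return (max_elev_m - min_elev_m) * 100.0 / 512.0


def landscape_xy_scale(metres_per_pixel):
    """XY scale in Unreal units (cm) per heightmap pixel."""
    return metres_per_pixel * 100.0


def to_height_value(elev_m, min_elev_m, max_elev_m):
    """Normalise an elevation sample into a 16-bit heightmap value."""
    t = (elev_m - min_elev_m) / (max_elev_m - min_elev_m)
    return round(t * 65535)


# Illustrative numbers only: a 10 m DEM covering 200 m to 520 m of elevation.
z_scale = landscape_z_scale(200.0, 520.0)
xy_scale = landscape_xy_scale(10.0)
```

With these example inputs the elevation span is 320 m, so the Z scale comes out to 62.5 and the XY scale to 1000.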

The fun part was trying to build in a boss battle using a multiplayer game manager that tracks players, controls a synced UI and triggers the game loop from timers.

I call it… 🐍 SnakeHollywood! 🐍

Check it out and let me know what you think!

The devil is in the details…

For this test I used a custom metahuman to record body and facial animation over livelink. The facial animation was automatically generated from a text file that was fed into a speech synthesis program and solved by Nvidia’s audio2face (as it streamed, over livelink) into Epic Games’ Unreal Engine where it was combined (in real-time) with body motion from Sony’s Mocopi system. Once the recording in sequencer was completed, the data was exported to Fortnite Creative using the UEFN toolset.

Like most technology-related things, you have to consider your use case… It’s not going to replace high-end inertial suits or optical volumes anytime soon, but it’s a hefty step forward for UGC and #XR Avatars.

Impressive real-time output from a $500 system! Super easy setup, comfortable to wear, nicely designed. Easy live-link integration into Unreal with solid retargeting to #metahuman skeletons using UE5 IK control rigs. Absolutely can see how this could fit into an appropriate performance capture pipeline immediately.

#mocopi #motioncapture #unrealengine

Metahumans in UEFN…

This weekend I spent a little time messing around with #UEFN, I was curious about the pipeline for migrating assets from #UE5 into #Fortnite. I was especially curious to see if it was possible to bring in #Metahumans.

Unfortunately the Fortnite editor doesn’t currently support morphs for skeletal meshes, so I tried parenting the source face rig to the body in Maya and then baking animation controls to the joints and was pleasantly surprised to see how easy it was!

Really opened my eyes to the possibilities for bringing in high quality facial and body animation into Fortnite Creative!

AND… I also had to see if I could bring over my virtual home, which at this point has become my metaverse-teapot. 🙂

You can check it out using this Island Code –> 7373-5242-3130

Live Metahumans – Pixel Streaming / WebXR / CoreWeave

An attempt to live stream metahumans using Unreal Engine experimental Pixel Streaming VR on QuestPro and PC. Running off CoreWeave Cloud RTX A6000 rendering two streams @ 60fps.

Streaming live #Metahumans in #XR.

Finally figured out a way to modify the Unreal Live Link face support plugin to allow ARKit sources to be discovered by packaged Android builds. I’ve been wanting to stream live face and body performances to #QuestPro using the Metahuman Face app and #Xsens motion data. I like the idea of viewing/directing a remote performance in XR while simultaneously harnessing the power and fidelity of the Metahuman pipeline for recording and post animation work directly in Sequencer.

In the same way that “Must See TV” was a ratings driver for television, maybe this is another look at how live “appointment experiences” might evolve for XR.

A quick NeRF test in Unreal Engine using nerfstudio trained with volinga from an iPhone polycam capture. ~15 minutes from start to finish.

Trying to crack the code on TikTok. This pipeline is set up with a couple of backstops that allow for animation fixes (if desired, in either Maya or Unreal), but for this TikTok experiment I’m using a rapid workflow to record live improvisational performances that can be immediately edited as video in Premiere and then conformed using an EDL with Unreal’s timecoded animation in Sequencer. This allows me to quickly subsequence an environment, add lighting and props and do some minor animation adjustments using the metahuman body and face rigs right in the timeline. It’s a great way to test some artificially un-intelligent humor without overthinking the process.
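Conforming from an EDL comes down to timecode arithmetic: each edit event's source in/out timecodes are turned into frame offsets inside the recorded take. A minimal sketch of that conversion, assuming non-drop-frame timecode and a whole-number frame rate; the function names and the example timecodes are made up for illustration.

```python
def timecode_to_frames(timecode, fps):
    """Convert non-drop-frame 'HH:MM:SS:FF' into an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in timecode.split(":"))
    if ff >= fps:
        raise ValueError(f"frame field {ff} is out of range for {fps} fps")
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def frames_to_timecode(frames, fps):
    """Convert an absolute frame count back into 'HH:MM:SS:FF'."""
    seconds, ff = divmod(frames, fps)
    minutes, ss = divmod(seconds, 60)
    hh, mm = divmod(minutes, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def conform_event(source_in, source_out, take_start, fps):
    """Return (start, end) frame offsets of an edit event within a take."""
    start = timecode_to_frames(source_in, fps) - timecode_to_frames(take_start, fps)
    end = timecode_to_frames(source_out, fps) - timecode_to_frames(take_start, fps)
    return start, end


# Example: a take that began at 01:00:00:00, cut from 10:12 to 14:00 at 24 fps.
start_frame, end_frame = conform_event("01:00:10:12", "01:00:14:00", "01:00:00:00", 24)
```

Here the event covers frames 252 to 336 of the take, an 84-frame cut. Drop-frame rates such as 29.97 need extra handling that this sketch leaves out.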

One of the cool things about the immersive web is the ability to switch (relatively seamlessly!) between different XR modes. I authored a three.js scene to interact with a 3d asset and parse between browser, headset (VR or AR) and Apple’s Quick Look (converting a GLB file to USDZ on the fly). Author once, deploy everywhere!

Here is a Quest Web Launch link to the scene : Do Not Sit Here!

*iOS 16 update recently broke some aspects of WebXR rendering which will make this appear black on mobile. There is a fix for this in the next update… so leaving it for now.

Testing out a Threejs/usdz scene with both WebXR *and* Apple Quick Look support… This should load in AR on iOS devices by clicking the Apple Reality icon in the embedded Threejs scene… if you have a connected WebXR headset you should be able to spawn the character in AR/passthrough. I can’t wait until Apple starts supporting morph targets within glTF files.

*iOS 16 update recently broke some aspects of WebXR rendering which will make this appear black on mobile. There is a fix for this in the next update… so leaving it for now.

Extending my WebXR knowledge with some basic VR teleportation mechanics.

Testing WebXR functionality. Using three.js to learn how GLTF importers work. Added camera controls that dynamically follow the head bone on an imported skeleton mesh. The AR version includes passthrough and should work for both Quest and Quest Pro. Haven’t tested extensively, but excited about the potential for this platform.

Using the quest browser to pixel stream the ue5 editor. Live link mocap data streaming into level while using unreal’s remote control. Custom OSC websockets to trigger OBS recording.

Testing the ability to take real-time Live Link motion capture data from unreal into a packaged Android build on the questpro. I wanted to try combining the face and eye tracking components meta supplies as part of their movement SDK. I had hoped to use their finger tracking, but it’s tied to the body tracking component and unfortunately there didn’t seem to be an easy way to set this up for my use case. The real-time live link motion data requires the use of animation blueprints and the movement sdk body tracking is exclusively skeletal mesh based. I’m sure this could be extended in the engine source in the future. I added a simple input on the motion control to swap skeletal meshes at runtime so I could see myself as different characters on the fly. The kind of visual feedback you get as a performer far surpasses the “magic mirror” that I built for the actors on Meet The Voxels.

Testing out body, face and eye tracking on the questpro in unreal using my head/hand meshes from an ARKit rig I had set up a few years ago. Most of the blends required are also available in ARKit (with different naming) and so this worked pretty much out of the box. The unreal examples meta provides aren’t that inspired and so I ripped the assets from the unity GitHub example to better understand how they are setting up their rigs.

Taking the XR approach to digital twins a little further. I tested more questpro color passthrough interactions to expand on the idea of blending the real and the virtual.

Going down the VR passthrough rabbit hole with the questpro, I wanted to see what it would be like to overlay the digital twin of my house and then reveal it by wiping away the virtual space. I was surprised at how well this worked along with the relative accuracy of my modeling skills that I had built up over the pandemic. 😉

The first thing I wanted to do with the questpro was to test color passthrough with the idea of streaming animation live in my living room. This is a little test I packaged for android out of unreal using some motion data I had captured a few months back.

Experimenting with a way to record camera and live-link mocap in VR with the help of my wife, daughter and Unreal Engine!

Since I don’t have access to an optical volume to do proper virtual camera work, I set up a system in Unreal Engine to record my camera data while streaming live link performance capture to my quest. Added a couple of input bindings to my motion controllers so I can quickly calibrate performers, and easily start and stop sequencer. Surprisingly I experienced very little latency, which is pretty amazing considering how saturated the network was with xsens, live-link, multiple ndi senders and receivers and the onboard recording of the HMD output.

Render is ue5 and obviously no cleanup on the mocap.

Auditioning Metahumans is as easy as CTRL-C and CTRL-V!

There are so many things that I’m blown away by when it comes to working with Metahumans… too many to list here. I’ve done a lot of retargeting of body animation between rigs over the years, but copying and pasting facial performances across Epic’s face control rig feels like I’m working in a word processing program from the 80’s… and it’s next level cool.

Leveraging UE5 to test Google’s Immersive Stream for XR platform (AR and web browser) utilizing a little homebrew full-body and facial performance capture. Looking forward to an OpenXR version of this tech for HMDs. 😉

Been working on a real-time previz pipeline in Unreal using Live Link Face and Xsens. This motion and face data has not been cleaned up. The body is recorded directly in MVN, HD processed and retargeted with skeletal dimensions that match the character. Face is processed from the calibrated Live Link CSV curves recorded on iPhone. Switchboard running through a Multiuser Session triggers takes in Sequencer, MVN and Live Link Face App.

Google Cloud stream is running off of a Linux version of UE 4.27.2 and the Vulkan API.

Looking at ways to take metahuman performance capture from unreal into a traditional maya based animation pipeline, specifically for body and face cleanup. This video shows raw motion that has been retargeted to several metahuman assets. The face and body controls from Unreal have been imported and hooked up to the source assets in Maya. There have been no modifications to the data in Maya; I’m just illustrating how a round trip would theoretically work in a production environment. Advanced Skeleton control rig is driving the body using motion capture retargeting and metahuman face controls are available for tweaking the facial performance in Maya.

The new mesh to metahuman tool in UE5 is insane! If you’ve spent any time pushing a scan through a wrap, maya, ue workflow to create custom metahumans… then we can agree this plugin is magic!

Immersive virtual memories are already a thing! My daughter traveled back in time to revisit her bedroom from when she was 8 years old. 🙂

tl;dr: Several years ago I took photogrammetry reference of my daughter’s room. I never really considered I was capturing the details and preserving a memory for her for years to come.

It’s interesting to contemplate how today’s photogrammetry is likely the equivalent of those grainy 2d photos from our childhoods. It’s not hard to imagine that as technology advances the quality and resolution of these spatial memories will greatly improve in much the same way as today’s 12MP smartphone cameras dwarf our parents’ Instamatics.

When experienced in virtual reality these immersive moments can be “relived” to some extent. The idea of being able to go back in time and visit my childhood bedroom to check out my C64 setup would almost feel like time traveling. I’m excited for this digital generation of kids and all the cool things that they are already getting to experience.

I tried streaming my full body avatar into an asymmetric VR experience alongside my daughter. A little latency, mostly as a result of multiple NDI streams being recorded simultaneously on the VR host machine. Enabled basic physics on the skeletal mesh to allow for collision between VR player/environment and the streaming avatar. The big takeaway was that my daughter spent most of the session climbing up and down on me and sticking her head into my mesh to see the backside of my eyeballs! 😂

Thought I would explore live-streaming full-body and facial performance in Unreal. I used an Xsens suit configured to stream over my home WiFi. I created an OSC interface on an iPad to control cameras and trigger OBS records. NDI streaming a live camera feed into the game environment. Additionally I was testing to see how accurate my one-to-one virtual space is with the real world.

This year, for obvious reasons, the Girl Scouts are doing virtual cookie sales… so my daughter and I created her pitch in VR! I used Unreal Engine and leveraged the open-source VRE plugin for most of the heavy lifting to create a multiplayer version of our home running off a listen server over LAN. I packaged a build that runs on Quest2 replicating OVRlipsync for lip-flap; NDI to source live video feed in game; OBS to collect and record all NDI streams.

A little VR house tour WIP:

I set up an Unreal Engine LiveLink OSC controller to record real-time facial performance. Using the OSC messaging protocol to communicate between ue4 editor and livelink face app, I can sync slates and start/stop takes simultaneously in sequencer and on the device. I added some simple multi-cam camera switching and lens control for illustration purposes. A couple extra calls to hide/show meshes and swap textures. I’m using a python script to grab OSC messages in OBS to record video previews. There is a little lag in the OBS records but the frame rate in the ue4 editor is ridiculously fast.
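Under the hood an OSC message is just a small binary packet, usually sent over UDP: a null-padded address string, a null-padded type tag string, then big-endian arguments. Here is a minimal encoder sketch using only the standard library. The `/take/start` address is hypothetical (each app defines its own address set), and only int, float and string arguments are handled.

```python
import struct


def _osc_string(text):
    """Encode a string as ASCII, null-terminated and padded to 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def encode_osc_message(address, *args):
    """Encode an OSC 1.0 message with int32, float32 and string arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            # bool is a subclass of int in Python, so reject it explicitly.
            raise TypeError("bool arguments are not supported in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)
        elif isinstance(arg, str):
            tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument type: {type(arg).__name__}")
    return _osc_string(address) + _osc_string(tags) + payload


# Hypothetical address and argument (a take number).
packet = encode_osc_message("/take/start", 1)
# To send it over UDP (host and port are placeholders):
# import socket
# socket.socket(socket.AF_INET, socket.SOCK_DGRAM).sendto(packet, ("127.0.0.1", 8000))
```

Because one packet can be sent to several listeners, the same trigger can start a take in the editor, on the device and in a recording tool at the same moment.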

On a machine learning quest to build custom training models for tracking and detection… I’m really blown away with how robust MediaPipe is out of the box. These ready-to-use open source solutions Google has provided to do cross-platform ML inference on mobile/desktop GPUs have inspired me to ponder some cool use cases…

I took my daughter out for a bike ride to teach her about GPU-accelerated realtime object detection. 🙂

First attempt at creating a DeepFake. Source data consisted of 1500 images extracted from a 3 minute video I shot of myself talking. Trained the model for approximately 12 hours (100k iterations) against the destination video of an audition that my wife had recorded. Some obvious improvements would be adding more source data covering a wider range of poses and eye directions, more training time/iterations, and a post comp pass for better color matching.

Driving my DIY photogrammetry head with an iPhone in UE4 in real-time:

My wife shaved my head with the dog clippers, my 10 year old captured me on the iPhone @ 4K/60p, metashape solved the dense cloud/mesh/texture, and wrap3d was used to retopologize for good uvs and blendshape creation in Maya.

Testing collision with physics-based grips using the VRE plugin for Unreal Engine:

A couple of weeks into the pandemic two things emerged: a messy house and my attempt at creating a room-scale version of it: